Gina Jihyeon Lee

AI Research Scientist

gina.ai at kakaobrain dot com

About Me

I am interested in addressing real-world challenges in trustworthy and practical AI/ML. My recent work centers on enhancing the coding and reasoning capabilities of LLMs and on employing LLMs as tool agents.

In the past, I have contributed to projects addressing large-scale foundation model training, in-context learning optimization, fair and robust language generation, multimodal question-answering, and unbiased representation learning.

I am also interested in the robustness and fairness of language models, knowledge-augmented LMs, and multimodal generative AI.

Latest News

- Sep 2023 🎤 Invited talk at Singapore Management University

- Jun 2023 🚀 Promoted to Project Leader at Kakao Brain

- Jan 2023 🏆 Paper accepted to EACL 2023 Findings

- Aug 2022 🏆 Paper accepted to WACV 2023

- Feb 2022 🎓 Officially a Master in Artificial Intelligence

- Oct 2021 🚀 Working at Kakao Brain as an AI Research Scientist

- Oct 2021 🏆 Paper accepted to NeurIPS 2021 as an Oral presentation

- Jul 2021 🏆 Paper accepted to ICCV 2021

- Jul 2021 📍 Starting my internship at Kakao Brain

- Aug 2020 📍 Working at Naver Papago as a Collaborative Researcher

- Aug 2020 🎓 Graduated cum laude with a Bachelor in Industrial Engineering & Business Management

- Aug 2020 🚀 Working at Classum as a Data Analyst & Marketer

Publications

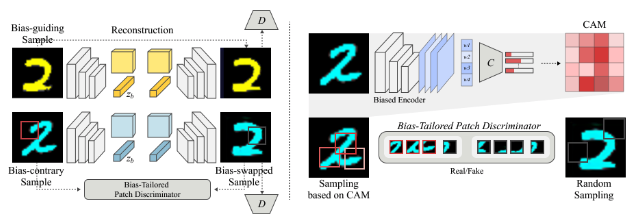

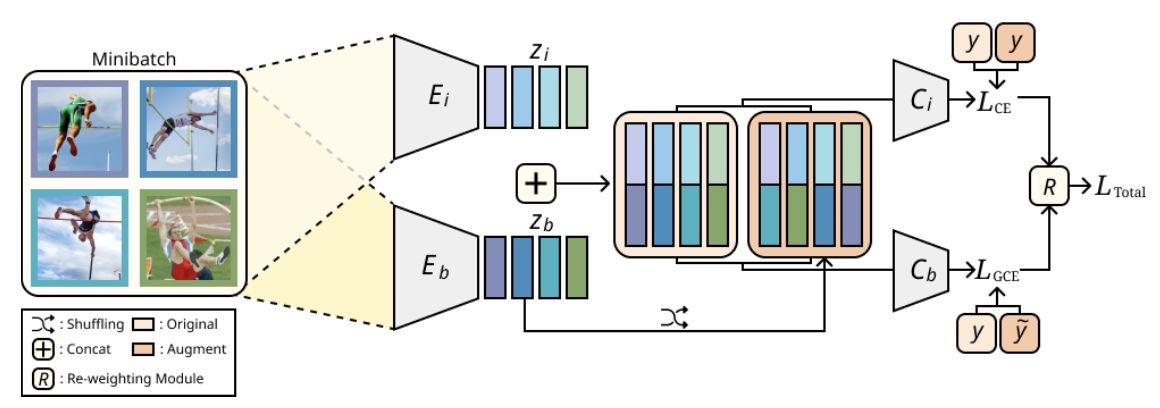

BiaSwap: Removing Dataset Bias with Bias-Tailored Swapping Augmentation

Eungyeup Kim*, Jihyeon Lee*, Jaegul Choo

International Conference on Computer Vision (ICCV), 2021, Accepted

[Paper]

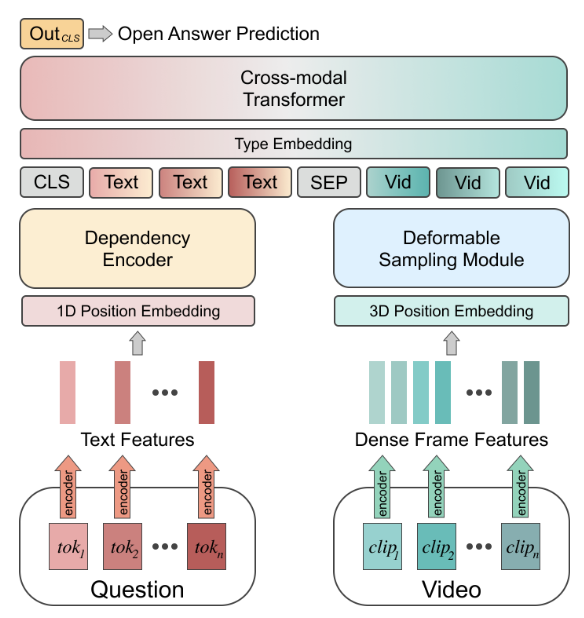

Dense but Efficient VideoQA for Intricate Compositional Reasoning

Jihyeon Lee*, Wooyoung Kang*, Eunsol Kim

Winter Conference on Applications of Computer Vision (WACV), 2023, Accepted

[Paper]

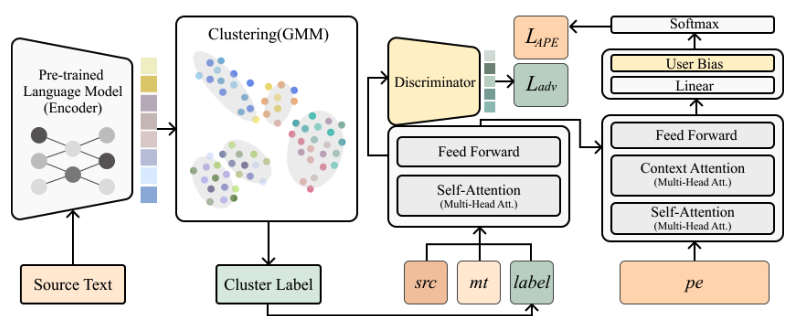

PePe: Personalized Post-editing Model utilizing User-generated Post-edits

Jihyeon Lee*, Taehee Kim*, Yunwon Tae*, Chunbok Park, Jaegul Choo

European Chapter of the Association for Computational Linguistics (EACL), 2023, Accepted

[Paper]

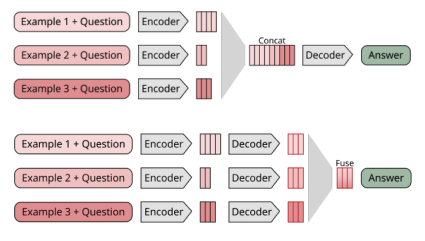

Exploiting the Potential of Seq2Seq Models as Robust Few-Shot Learners

Jihyeon Lee*, Dain Kim*, Doohae Jung*, Boseop Kim, Kyoung-Woon On

arXiv preprint

[Paper]
